The Virtues of Nuclear Ignorance

Americans of a certain age are well acquainted with one of Ronald Reagan’s pithier maxims: “Trust, but verify.” It is a translation of a Russian proverb, and Reagan used it often in his dealings with the Soviet Union over nuclear disarmament, as both countries chipped away tentatively at their Cold War stockpiles. For him, the saying encapsulated the idea that a good treaty would require rigorous inspections to ensure that neither side was dragging its feet or, worse, disposing of decoy bombs rather than real ones. But how should the process of verification work? If a U.S. scientist were allowed to dismantle a Russian warhead personally, he or she could identify it as legitimate fairly easily, even if it differed in design from American models. But, in doing so, the scientist would necessarily learn many classified details about the weapon, something that the Russians would never consent to. (Nor would the Americans, if their roles were swapped.)

This problem has shaped the form of arms-control agreements. Typically, they have focussed not on warheads but on more visible nuclear infrastructure: missile silos, submarines, and bombers. To prove that one of these so-called delivery vehicles has been taken out of commission, all a superpower needs to do is destroy it—blow up the silo, scuttle the sub, de-wing the bomber—and lay the pieces out for its rival’s satellites to see. (In Arizona, there is an Air Force facility where guillotined B-52s lie rusting in the desert.) The options available for verifying warheads are significantly less clear-cut. You can’t rely on things like weight or radiation profile alone, since they can be faked, and even the simplest measurements can reveal design secrets. In the past few decades, government researchers have developed other methods, most of which rely on what is known as an information barrier—a computer that obscures or forgets classified data on demand, telling its human user only “real” or “not real.” But this solution is imperfect, too, since there is always the possibility of a hack. A programmer could change the code so that the computer transmits its data, hides it for future recovery, or reports a hoax object as real.

Alexander Glaser, the director of Princeton University’s Nuclear Futures Laboratory, a hybrid science and policy group, has been thinking about the question of verification for years. Born in West Germany, he became interested in nuclear weapons as an undergraduate, just before the Berlin Wall came down. “The Cold War was something you saw and experienced every day,” he told me. The science of verification appealed to him, he said, because it turned traditional physics “upside down” in a constructive, interesting, real-world way. One day in 2010, he discussed the subject over lunch with Princeton’s dean of faculty. Glaser admitted that he wasn’t quite sure where to start. The dean, a computer scientist, noted that the problem had all the hallmarks of a zero-knowledge proof, a cryptographic concept dating back to the mid-eighties.

Suppose that you and a friend are standing in front of a pine tree. Your friend claims to have a quick way of knowing how many needles are on it (several hundred thousand, say) but doesn’t want to reveal either the exact number of needles or his method of counting. To test whether he’s being truthful, you have the friend privately count all the needles on the tree, and then you pluck a handful of needles from it and tally them up, keeping the total to yourself. Your friend counts the needles on the tree again, then tells you the difference between the first and second numbers. If he really does know how many needles are on the tree, then he also knows how many are in your hand. He has proved he wasn’t bluffing, but at the same time he has avoided divulging anything new to you, since the only number he revealed is one you already had. And there is no plausible way your friend could fake the result, other than just guessing, which would be easily ferreted out if you played the game a few times in succession with different handfuls of needles. In the cryptologic jargon, the proof is both complete (a friend who really can count will always pass) and sound (a bluffer will eventually be caught).
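The needle game is simple enough to simulate in a few lines. What follows is a toy illustration, not the Princeton team’s protocol: the tree is just an integer, the names `honest_friend`, `bluffing_friend`, `play`, and `MAX_HANDFUL` are hypothetical, and the assumption that a handful holds between one and a thousand needles is arbitrary. Handing the true before-and-after counts to the honest friend stands in for his secret counting method; the bluffer has no such method, so he can only guess.

```python
import random

MAX_HANDFUL = 1_000  # hypothetical: the verifier grabs between 1 and 1,000 needles


def honest_friend(before: int, after: int, rng: random.Random) -> int:
    # Receiving the true counts models the friend's secret counting method.
    # He reveals only the difference, a number the verifier already knows.
    # (rng is unused here; it keeps both friends' signatures identical.)
    return before - after


def bluffing_friend(before: int, after: int, rng: random.Random) -> int:
    # Cannot count the tree, so he ignores the counts and guesses the handful.
    return rng.randint(1, MAX_HANDFUL)


def play(friend, tree: int, rounds: int, rng: random.Random) -> bool:
    """Return True if the friend reports every handful correctly."""
    for _ in range(rounds):
        handful = rng.randint(1, MAX_HANDFUL)  # verifier's secret tally
        before, tree = tree, tree - handful    # pluck the handful from the tree
        if friend(before, tree, rng) != handful:
            return False                       # bluff detected
    return True


if __name__ == "__main__":
    rng = random.Random(2014)
    print(play(honest_friend, 300_000, rounds=5, rng=rng))    # True
    print(play(bluffing_friend, 300_000, rounds=5, rng=rng))
```

The honest friend passes every round, which is completeness. A guesser survives each round with probability 1/1,000 under these assumptions, so his odds of lasting k rounds fall as (1/1,000)^k, which is soundness. Throughout, the verifier learns nothing except numbers it had already counted itself.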

For Glaser, the idea was a revelation. He began trying to conceive of a zero-knowledge proof for nuclear-weapons verification, teaming up with his colleague Robert Goldston, a plasma physicist, and the computer scientist Boaz Barak, who at the time worked for Microsoft Research and is now a professor at Harvard. Together, they came up with a protocol, publishing it in 2014. At that point, though, the method was entirely theoretical. It took another physicist, a Princeton graduate student named Sébastien Philippe, to turn it into an experimental result. (Philippe is French, and his interest in nuclear weapons, like Glaser’s, is somewhat personal; his father served in the French Navy aboard a ballistic-missile submarine.) On Tuesday, the scientists published a description of their experiment in Nature Communications.

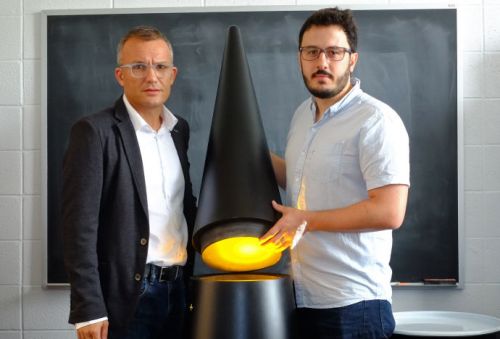

Their approach envisions a sort of game between the weapons inspector and his inspectee, the host country that owns the weapons being counted. It is known as an interactive proof, because it involves several rounds of querying. First, inspector and host establish a baseline detector reading. The detector, in this case, is an array of gel-filled tubes, developed by Francesco D’Errico, a radiologist at Yale. The tubes are sensitive to neutron radiation, which is one byproduct of a nuclear weapon. When neutrons pass through the gel, they leave tiny bubbles. The two players fire a neutron emitter at the detector for five minutes, then record how many bubbles they see. Let us assume, for simplicity’s sake, that there are a thousand. Now, like an Etch A Sketch, the detector is reset and the bubbles erased.